BPS Tracker

Bull Put Spread position management at a glance

A position tracker built for options traders. Monitor Greeks in real time, assess risk, and track profits so every trade stays under control.

Core Features

Everything you need to manage your Bull Put Spread positions

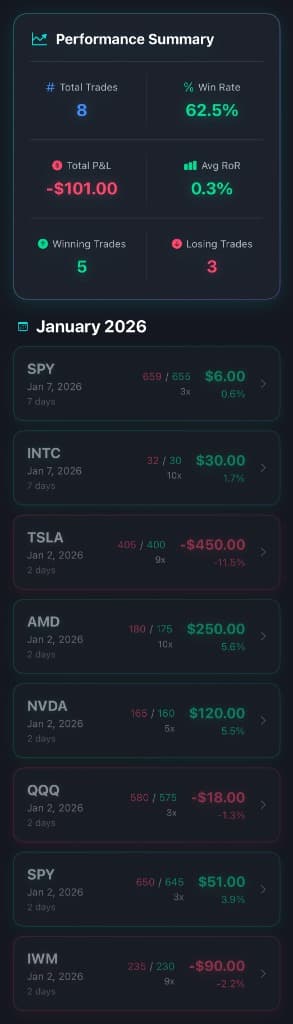

Position Overview

See the P&L, win rate, and average return of all your positions at a glance
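As a rough illustration of the overview metrics, the sketch below computes win rate, total P&L, and average return across closed trades. The page does not say how BPS Tracker defines these, so the definitions are assumptions: a trade counts as a win when its realized P&L is positive, and return is measured against the trade's maximum risk in dollars. `ClosedTrade` and `summarize` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ClosedTrade:
    # Hypothetical record: realized P&L and capital at risk (max loss), both in dollars
    pnl: float
    max_risk: float


def summarize(trades: list[ClosedTrade]) -> dict[str, float]:
    """Win rate, total P&L, and average return on risk (assumed definitions)."""
    if not trades:
        return {"win_rate": 0.0, "total_pnl": 0.0, "avg_ror": 0.0}
    wins = sum(1 for t in trades if t.pnl > 0)  # assumption: win means P&L > 0
    total_pnl = sum(t.pnl for t in trades)
    avg_ror = sum(t.pnl / t.max_risk for t in trades) / len(trades)
    return {
        "win_rate": wins / len(trades),
        "total_pnl": total_pnl,
        "avg_ror": avg_ror,
    }


trades = [ClosedTrade(120.0, 380.0), ClosedTrade(-380.0, 380.0), ClosedTrade(95.0, 405.0)]
print(summarize(trades))
```

Averaging per-trade return on risk weights every trade equally regardless of size; a size-weighted alternative would divide total P&L by total risk instead.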

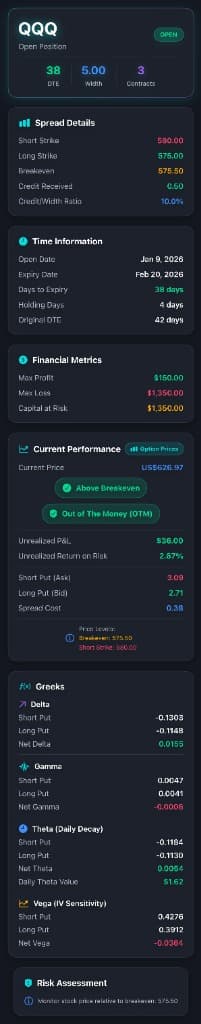

Greeks Analysis

Full Delta, Gamma, Theta, and Vega breakdown, so you always know your risk exposure
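A sketch of how spread-level Greeks could be computed, assuming the Black-Scholes model for European puts with no dividends and a single implied volatility shared by both legs (no skew). The page does not state which pricing model BPS Tracker uses; `put_greeks` and `bull_put_greeks` are hypothetical names. A bull put spread sells the higher-strike put and buys the lower-strike put, so each position Greek is the long leg minus the short leg, scaled by contracts and the 100-share multiplier.

```python
import math
from dataclasses import dataclass


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def norm_pdf(x: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


@dataclass
class Greeks:
    delta: float
    gamma: float
    theta: float  # per calendar day
    vega: float   # per 1 volatility point (0.01)


def put_greeks(s: float, k: float, t: float, r: float, sigma: float) -> Greeks:
    """Black-Scholes Greeks for one long European put, per share, no dividends."""
    sqrt_t = math.sqrt(t)
    d1 = (math.log(s / k) + (r + 0.5 * sigma ** 2) * t) / (sigma * sqrt_t)
    d2 = d1 - sigma * sqrt_t
    pdf = norm_pdf(d1)
    delta = norm_cdf(d1) - 1.0
    gamma = pdf / (s * sigma * sqrt_t)
    theta_per_year = -s * pdf * sigma / (2.0 * sqrt_t) + r * k * math.exp(-r * t) * norm_cdf(-d2)
    vega = s * pdf * sqrt_t
    return Greeks(delta, gamma, theta_per_year / 365.0, vega / 100.0)


def bull_put_greeks(s: float, short_strike: float, long_strike: float, t: float,
                    r: float, sigma: float, contracts: int = 1, multiplier: int = 100) -> Greeks:
    """Position Greeks: short the higher-strike put, long the lower-strike put."""
    short_leg = put_greeks(s, short_strike, t, r, sigma)
    long_leg = put_greeks(s, long_strike, t, r, sigma)
    scale = contracts * multiplier
    return Greeks(
        delta=(long_leg.delta - short_leg.delta) * scale,
        gamma=(long_leg.gamma - short_leg.gamma) * scale,
        theta=(long_leg.theta - short_leg.theta) * scale,
        vega=(long_leg.vega - short_leg.vega) * scale,
    )


g = bull_put_greeks(s=100.0, short_strike=95.0, long_strike=90.0, t=30 / 365, r=0.04, sigma=0.25)
print(g)
```

With these inputs the spread shows positive delta and theta and negative gamma and vega, the usual profile of a bullish short-premium position.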

Risk Assessment

Real-time calculation of maximum loss, breakeven point, and safety margin
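For a bull put spread, maximum profit is the net credit, maximum loss is the strike width minus the credit, and the breakeven at expiration is the short strike minus the credit; all three follow from the spread's payoff. "Safety margin" is not defined on the page, so this sketch assumes it means the fraction the underlying can fall from its current price before reaching breakeven. `bull_put_risk` and `RiskMetrics` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RiskMetrics:
    max_profit: float     # dollars, if both puts expire worthless
    max_loss: float       # dollars, if the underlying closes at or below the long strike
    breakeven: float      # underlying price at expiration where P&L is zero
    safety_margin: float  # hypothetical definition: fraction the price can fall before breakeven


def bull_put_risk(spot: float, short_strike: float, long_strike: float, credit: float,
                  contracts: int = 1, multiplier: int = 100) -> RiskMetrics:
    """Risk metrics for a bull put spread; strikes and credit are per share."""
    if short_strike <= long_strike:
        raise ValueError("short strike must be above long strike")
    width = short_strike - long_strike
    if not 0 < credit < width:
        raise ValueError("credit must be positive and smaller than the strike width")
    scale = contracts * multiplier
    breakeven = short_strike - credit
    return RiskMetrics(
        max_profit=credit * scale,
        max_loss=(width - credit) * scale,
        breakeven=breakeven,
        safety_margin=(spot - breakeven) / spot,
    )


m = bull_put_risk(spot=100.0, short_strike=95.0, long_strike=90.0, credit=1.20, contracts=2)
print(m)
```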

Expiration Tracking

DTE countdown and days held at a glance, so you never miss a chance to close a position
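A minimal sketch of the date arithmetic behind DTE (days to expiration) and days held, assuming calendar days rather than trading days. `needs_review` and its 21-day threshold are hypothetical, included only to show how a closing reminder might hang off the DTE count.

```python
from datetime import date


def days_to_expiry(expiry: date, today: date) -> int:
    """Calendar days until expiration, floored at 0 once expired."""
    return max((expiry - today).days, 0)


def days_held(opened: date, today: date) -> int:
    """Calendar days since the position was opened."""
    return (today - opened).days


def needs_review(dte: int, threshold: int = 21) -> bool:
    """Hypothetical reminder rule: flag positions at or inside `threshold` DTE."""
    return dte <= threshold


opened, expiry, today = date(2025, 3, 3), date(2025, 4, 17), date(2025, 3, 20)
dte = days_to_expiry(expiry, today)
print(dte, days_held(opened, today), needs_review(dte))
```

For these dates the script prints `28 17 False`.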

Performance Records

A complete history of closed positions, with realized return and annualized yield
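One plausible way to compute realized and annualized return for a closed spread. The page does not define either metric, so these choices are assumptions: return is measured on capital at risk (strike width minus credit, per share), and annualization is simple rather than compounded. A same-day close is treated as one day held to avoid dividing by zero. `realized_return` and `annualized_simple` are hypothetical names.

```python
def realized_return(open_credit: float, close_debit: float, width: float) -> float:
    """Realized P&L divided by capital at risk (width minus credit), per share."""
    capital_at_risk = width - open_credit
    return (open_credit - close_debit) / capital_at_risk


def annualized_simple(period_return: float, days_held: int) -> float:
    """Simple (non-compounded) annualization over a 365-day year."""
    return period_return * 365 / max(days_held, 1)


r = realized_return(open_credit=1.20, close_debit=0.30, width=5.0)
print(round(r, 4), round(annualized_simple(r, days_held=20), 4))
```

A compounded version, `(1 + r) ** (365 / days) - 1`, is at least as large as the simple figure for holding periods under a year, which is why short, profitable trades can show very large annualized numbers.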

Intuitive Interface

Dark theme and clear color coding, with visuals designed for traders

App Screenshots

See how BPS Tracker helps you manage your positions

Performance overview: win rate, P&L, and return at a glance

Position details: complete Greeks and risk assessment

Closed positions: track the actual performance of every trade

How It Works

Three simple steps to take control of your Bull Put Spread positions

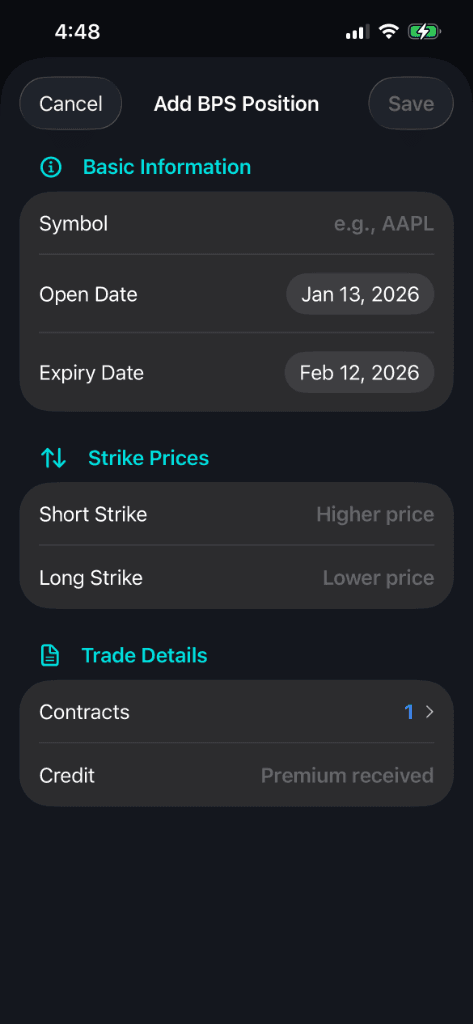

Step 01

Enter Positions

Enter your Bull Put Spread details: underlying symbol, strike prices, expiration date, and number of contracts

Symbol · Strike Prices · Expiry Date
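The inputs listed for Step 01 map naturally onto a small record type. This sketch assumes strikes in dollars per share and uses validation rules of our own choosing (the short strike must sit above the long strike, and at least one contract). `BullPutSpread` is a hypothetical name, and the premium received is left out because the step does not list it.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BullPutSpread:
    symbol: str
    short_strike: float  # higher strike: the put that is sold
    long_strike: float   # lower strike: the put that is bought
    expiry: date
    contracts: int

    def __post_init__(self) -> None:
        # Hypothetical validation rules for a well-formed bull put spread
        if not self.symbol.strip():
            raise ValueError("symbol is required")
        if not (self.short_strike > self.long_strike > 0):
            raise ValueError("need short strike > long strike > 0")
        if self.contracts < 1:
            raise ValueError("contracts must be at least 1")

    @property
    def width(self) -> float:
        """Distance between strikes, per share."""
        return self.short_strike - self.long_strike


p = BullPutSpread("SPY", 500.0, 495.0, date(2025, 6, 20), 2)
print(p.width)
```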

Step 02

Real-Time Tracking

The app automatically calculates Greeks, P&L, and risk metrics, so you always know where your position stands

Win Rate · Total P&L · Avg RoR
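For an open position, a common mark-to-market approach (assumed here, not taken from the app) treats the cost to close as the short put's price minus the long put's price; unrealized P&L is the credit received minus that cost, times contracts and the 100-share multiplier. The marks passed in are hypothetical quotes, and `unrealized_pnl` and `pct_of_max_profit` are illustrative names.

```python
def unrealized_pnl(credit: float, short_put_mark: float, long_put_mark: float,
                   contracts: int = 1, multiplier: int = 100) -> float:
    """Open P&L in dollars: credit received minus current cost to close the spread."""
    cost_to_close = short_put_mark - long_put_mark
    return (credit - cost_to_close) * contracts * multiplier


def pct_of_max_profit(credit: float, short_put_mark: float, long_put_mark: float) -> float:
    """Share of the maximum profit (the full credit) captured so far."""
    return (credit - (short_put_mark - long_put_mark)) / credit


pnl = unrealized_pnl(credit=1.20, short_put_mark=0.85, long_put_mark=0.30, contracts=2)
print(round(pnl, 2), round(pct_of_max_profit(1.20, 0.85, 0.30), 4))
```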

Step 03

Close Positions

Record when and how you close each position to build a complete history of your trading performance

Greeks · Risk Metrics · P&L Analysis

Ready to start tracking?

Coming 2025

Coming soon to the App Store

Status: in development